Introduction

Teachers continuously utilize important information about students’ abilities and skills to make pedagogical decisions and inform instructional practices. Effective educators draw information from a wide variety of sources to guide teaching practices, with the ultimate goal of improving student learning. Crafting successful academic interventions requires teachers to identify underperforming students early enough to provide them with instructional support, but collecting and aggregating student data from varied and multiple sources can be a laborious and time-consuming task. Teacher-facing dashboards containing student-level data have been shown to serve as a useful and time-saving tool for helping teachers address these obstacles and determine appropriate actions for their students (Molenaar & Knoop-van Campen, 2017).

Although online educational environments provide students with flexible learning schedules, the self-paced nature of the courses creates an additional layer of complexity for teachers. One challenge of working in a digital learning environment is monitoring student activity. This is perhaps most critical for students who have encountered a learning difficulty or ceased to be engaged. In a self-paced curriculum, interventions must be tailored to each student’s personal goals and time constraints. Ideally, this process would identify at-risk students before they reach the critical point at which they begin to struggle or disengage. Teachers must also plan strategically around their own time limitations, which often requires them to prioritize the needs of students at the greatest academic risk.

Technology can make relevant data readily accessible to teachers to inform classroom instruction. Creating dashboards involves aggregating complex data structures and displaying student information in a way that is meaningful and easy to use. However, designing effective teacher awareness tools is challenging. Designers must anticipate the potential effects of analytics on teacher behavior and how these changes in behavior will ultimately influence student learning.

Using learning analytics and machine learning to guide the implementation of teacher-facing dashboards can be critical to designing an effective teacher awareness tool. Learning analytics involves the collection and analysis of data about learners in academic settings in order to understand and improve learning and the environments in which it occurs (Long & Siemens, 2011). Once obtained, this information can feed predictive systems that rely on machine learning, the automated detection of meaningful patterns in data (Shalev-Shwartz & Ben-David, 2014). Used together, learning analytics and machine learning can condense high-dimensional data to classify or predict concepts or events. The combination of these tools can prove invaluable in summarizing data into concise, actionable insights for teachers.

With the emergence of fully digital education, learning analytics becomes not only technically feasible but also potentially a powerful tool for teachers to understand their students’ progress and challenges. Using techniques from the emerging fields of learning analytics and machine learning, StrongMind implemented teacher-facing dashboards to help teachers make data-driven instructional decisions. This report summarizes the findings of a study that investigated the extent to which teachers’ use of dashboards influenced students’ educational outcomes.

Background

The StrongMind curriculum was developed to facilitate digital learning by giving students the flexibility to work through content at their own pace and in a manner suited to their individual needs. Although teachers often set expectations for course pacing, students ultimately choose the rate at which they progress through the course. Thus, on any given day, students may be in parts of a course that differ from the teacher’s expectation. Despite this, teachers must still plan individual and group instruction that is both relevant and strategic. To meet these needs, teachers had to aggregate large amounts of data from multiple locations, a time-intensive process that hindered their workflows. To address this problem, a dashboard was created to serve as a centralized location for student data.

Purpose of the study

Efforts to design and develop tools such as teacher-facing dashboards are often driven by the assumption that these tools will improve teaching and, ultimately, student academic outcomes. However, research supporting these assumptions is scarce (Rodríguez-Triana et al., 2017). Additionally, previous research applying machine learning techniques to education data has focused primarily on predicting student pass/fail, withdrawal, and retention rates (Vitiello et al., 2017). This study was undertaken to address a gap in the literature on the impact of teacher-facing dashboards on student academic performance.

Research Questions

The following research questions were examined:

- What effect did the introduction of a teacher dashboard have on student academic outcomes?

- What effect did the introduction of a teacher dashboard have on the proportion of students who were disengaged in their course?

Methods

Dashboard Creation and Implementation

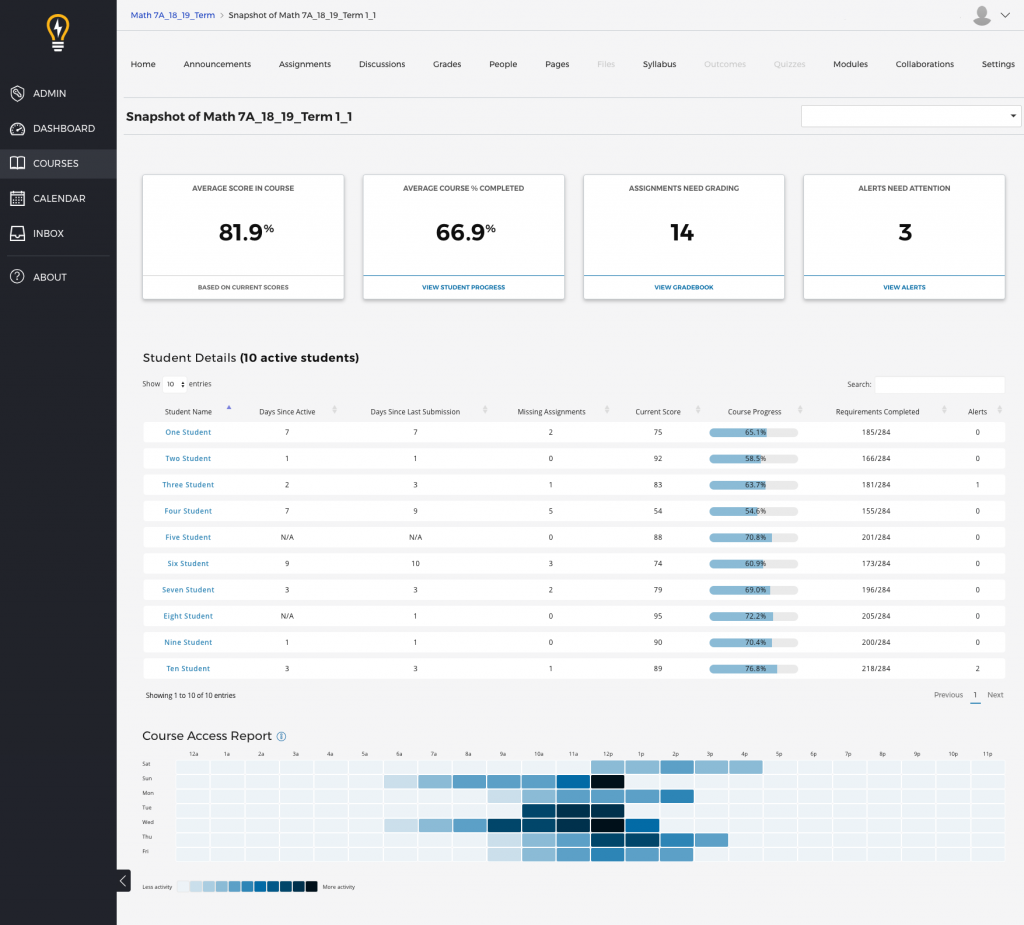

In-depth interviews were conducted with teaching staff of the fully online public school selected to participate in the research study. The interviews were designed to identify which data elements would be most helpful to teachers in their planning needs and educational interventions. Based on the feedback provided by the teachers, StrongMind engineering teams conceptualized and built the teacher dashboard, which included data points related to student progress, performance, engagement, and educational plans.

The dashboard was developed in the early summer of 2016 as part of a larger Learning Management System (LMS) software package and fully rolled out to the school on July 1, 2016. Teachers attended large-group training sessions on the dashboard’s functionality and were also encouraged to participate in departmental training sessions on using the dashboard in support of their department’s goals.

The dashboard was designed to provide a condensed view of the common student-level data that teachers use daily to monitor performance. This information was intended to guide lesson planning and inform individualized student intervention strategies. The StrongMind LMS captured and aggregated real-time student performance data, which was displayed within the dashboard. Teachers had a dashboard for each course, showing data for every student in that course. Each time a teacher refreshed the dashboard, it displayed the most recent information available at that moment.

Risk Ranking

A critical aspect of the dashboard’s functionality was ranking students automatically by academic risk, with the students identified as most at risk appearing first. To power the risk assessment, the dashboard used a proprietary machine learning model to assign each student a risk score. Scores were updated every five minutes, so each student’s risk score reflected academic risk in close to real time. During the study, the dashboard used these scores to sort students, placing those with the highest risk at the top and those with the lowest risk at the bottom.
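Because the risk model itself is proprietary, the sketch below covers only the ordering logic: a default view sorted by descending risk score, and a teacher-selected sort on another displayed column. The `StudentRow` fields, the function names, and the sample scores are hypothetical illustrations, not StrongMind's data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StudentRow:
    """One row of a course dashboard (hypothetical fields)."""
    name: str
    risk_score: float      # stand-in for the proprietary model's output
    final_grade: float     # percent
    days_since_login: int


def risk_sorted(rows: List[StudentRow]) -> List[StudentRow]:
    """Default view: highest risk at the top, lowest risk at the bottom."""
    return sorted(rows, key=lambda r: r.risk_score, reverse=True)


def sorted_by(rows: List[StudentRow], field: str, descending: bool = False) -> List[StudentRow]:
    """Teacher-selected sort on any other displayed column."""
    return sorted(rows, key=lambda r: getattr(r, field), reverse=descending)


roster = [
    StudentRow("Ana", risk_score=0.12, final_grade=91.0, days_since_login=1),
    StudentRow("Ben", risk_score=0.87, final_grade=42.5, days_since_login=9),
    StudentRow("Cy", risk_score=0.55, final_grade=68.0, days_since_login=12),
]

print([r.name for r in risk_sorted(roster)])                               # ['Ben', 'Cy', 'Ana']
print([r.name for r in sorted_by(roster, "days_since_login", True)])       # ['Cy', 'Ben', 'Ana']
```

In this sketch, "refreshing" the dashboard corresponds to calling `risk_sorted` again on the latest scores, which discards any teacher-chosen ordering.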

Teachers could also sort students themselves using any other data point displayed on the dashboard, such as final grade or days since last login. To return to the risk sort after sorting by another column, teachers simply refreshed the dashboard, which restored the most current risk ordering.

Disengaged students

Students who had completed less than 10% of the course and had an average assignment grade of less than 15% were identified as disengaged. Students meeting both criteria formed the disengaged group, and the proportion of disengaged students was the percentage of students in each group who met both criteria.
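The disengagement rule combines two thresholds, both of which must hold. A minimal sketch, assuming each student is summarized as a hypothetical `(completion_pct, avg_grade_pct)` pair and reading both cut-offs as strict "less than" comparisons:

```python
from typing import Iterable, Tuple

COMPLETION_CUTOFF = 10.0  # percent of course completed
GRADE_CUTOFF = 15.0       # average assignment grade, percent


def is_disengaged(completion_pct: float, avg_grade_pct: float) -> bool:
    """Both criteria must hold; strict '<' on each cut-off (assumed reading)."""
    return completion_pct < COMPLETION_CUTOFF and avg_grade_pct < GRADE_CUTOFF


def disengaged_proportion(students: Iterable[Tuple[float, float]]) -> float:
    """Share of (completion_pct, avg_grade_pct) pairs flagged as disengaged."""
    flags = [is_disengaged(c, g) for c, g in students]
    if not flags:
        raise ValueError("empty group")
    return sum(flags) / len(flags)


group = [(5.0, 10.0), (50.0, 80.0), (8.0, 14.9), (9.0, 20.0)]  # hypothetical students
print(disengaged_proportion(group))  # 0.5
```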

Participants

The dashboard was made available to teachers of a total of 48 classes, spanning 21 course subjects, in the fully online public school described above. Data was collected for 1,021 students in these classes. To examine the impact of the dashboard, data for these students was compared with historical data from the same school for the previous academic year. Comparison data was collected at the same school through the same software system as the dashboard group, and comparison courses were identified by matching each dashboard course to the same course subject with similar start and end dates in the prior year. The comparison group contained student data for 94 classes, representing 2,134 students.

Table 1. Student Counts by Grade Level for Dashboard and Comparison Groups

| Group | Grade 9 | Grade 10 | Grade 11 | Grade 12 | Total |
|---|---|---|---|---|---|
| Dashboard | 134 | 227 | 210 | 450 | 1,021 |
| Comparison | 234 | 495 | 577 | 828 | 2,134 |

The dashboard group was 57.4% female and 42.6% male; the comparison group was 56.4% female and 43.6% male.
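The comparison courses were matched on course subject and on similar start and end dates one year earlier. The report does not define "similar", so the sketch below assumes a hypothetical tolerance of 14 days on both dates; the `Course` record, the course IDs, and the subject names are likewise illustrative. Returning a list reflects that one dashboard course can match several prior-year classes.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass(frozen=True)
class Course:
    course_id: str
    subject: str
    start: date
    end: date


def one_year_earlier(d: date) -> date:
    """Same calendar date one year back; Feb 29 falls back to Feb 28."""
    try:
        return d.replace(year=d.year - 1)
    except ValueError:
        return d.replace(year=d.year - 1, day=28)


def comparison_courses(course: Course, history: List[Course], tolerance_days: int = 14) -> List[Course]:
    """Prior-year courses with the same subject and start/end dates within the tolerance."""
    tol = timedelta(days=tolerance_days)
    start, end = one_year_earlier(course.start), one_year_earlier(course.end)
    return [
        h for h in history
        if h.subject == course.subject
        and abs(h.start - start) <= tol
        and abs(h.end - end) <= tol
    ]


current = Course("ALG-A", "Algebra I", date(2017, 10, 20), date(2018, 1, 19))
history = [
    Course("ALG-1", "Algebra I", date(2016, 10, 24), date(2017, 1, 20)),
    Course("ALG-2", "Algebra I", date(2016, 8, 1), date(2016, 12, 16)),
    Course("BIO-1", "Biology", date(2016, 10, 20), date(2017, 1, 19)),
]
print([h.course_id for h in comparison_courses(current, history)])  # ['ALG-1']
```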

Data Collection

Student data for the treatment group was collected through the LMS for classes running from October 20, 2017, to January 19, 2018, and compared with data for the course-subject equivalents in the prior year (the comparison group). StrongMind examined three measures of student academic performance. The first was the student’s final grade in the class, calculated as the weighted sum of the grades on all assignments required in the course. The final grade ultimately determines whether a student passes a course; it is included in GPA calculations and formally recorded on the student’s record. The other two measures capture aspects of academic performance that are reported less often. One is course completion percent: the percentage of course content the student has engaged with by the end of the course term. Because the courses are self-paced, some students may not complete all course content by the end of the term. The last measure is attempted grade. Unlike the final grade, the attempted grade is unweighted and averages only the grades of assignments the student completed. Although course completion percent and attempted grade are both related to final grade, attempted grade speaks more to the quality of the work a student submits.
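The three measures differ mainly in how unfinished work is treated. The sketch below assumes a hypothetical `Assignment` record in which weights over all required assignments sum to 1 and an uncompleted assignment has no score; it also approximates "course content engaged with" as the share of required assignments completed, which is an assumption rather than the LMS's actual definition.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Assignment:
    weight: float            # share of the final grade; weights over all required assignments sum to 1
    score: Optional[float]   # percent score, or None if the student has not completed it


def final_grade(items: List[Assignment]) -> float:
    """Weighted sum over every required assignment; incomplete work counts as 0."""
    return sum(a.weight * (a.score if a.score is not None else 0.0) for a in items)


def attempted_grade(items: List[Assignment]) -> Optional[float]:
    """Unweighted mean of completed assignments only (None if nothing is completed)."""
    done = [a.score for a in items if a.score is not None]
    return sum(done) / len(done) if done else None


def completion_percent(items: List[Assignment]) -> float:
    """Share of required assignments completed (assumed proxy for content engaged with)."""
    return 100.0 * sum(a.score is not None for a in items) / len(items)


course = [Assignment(0.2, 90.0), Assignment(0.3, 70.0), Assignment(0.5, None)]
print(round(final_grade(course), 2))         # 39.0
print(attempted_grade(course))               # 80.0
print(round(completion_percent(course), 2))  # 66.67
```

The example shows why attempted grade "speaks more to the quality of the work": the same student scores 39.0 on final grade but 80.0 on attempted grade, because the unfinished assignment pulls down only the former.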

Results

To examine the effects of the teacher-facing dashboard on student academic outcomes, group means on academic indicators were compared between students in courses taught by teachers using the dashboard and students in matched historical courses. Student final grades, course completion percentage, and attempted grades were compared for both significance and effect size. Significance was tested with independent-samples t-tests. The dashboard group’s final grades exceeded those of the historical comparison group by almost 3 points; t(1)=2.38, p=.02, d=.08. The dashboard group also had significantly higher attempted grades (M=67.47, SD=18.85) than the historical group (M=66.59, SD=33.85); t(1)=-2.08, p=.04, d=.06. Course completion rates did not differ significantly between the dashboard group (M=70.05, SD=33.30) and the historical group (M=67.59, SD=33.85); t(1)=-1.76, p=.08.
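For readers who want to reproduce this kind of comparison, the sketch below computes an independent-samples t-test and Cohen's d from raw scores. The report does not say whether a pooled-variance (Student's) or Welch test was used; this sketch assumes the pooled version and uses the pooled standard deviation as the denominator of d. To stay dependency-free, the p-value uses a normal approximation to the t distribution, which is reasonable only for large samples such as groups in the thousands. The function name and sample data are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int, float, float]:
    """Student's (pooled-variance) independent-samples t-test plus Cohen's d.

    Returns (t, df, p_two_sided, d). The p-value uses a normal approximation
    to the t distribution, which is only reasonable when df is large.
    """
    n1, n2 = len(a), len(b)
    m1, m2 = mean(a), mean(b)
    df = n1 + n2 - 2
    # Pooled standard deviation from the two sample variances (n - 1 denominators)
    sp = math.sqrt(((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df)
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / sp
    p = math.erfc(abs(t) / math.sqrt(2))  # two-sided tail probability of a standard normal
    return t, df, p, d


t, df, p, d = pooled_t_test([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])  # hypothetical scores
print(round(t, 3), df, round(d, 3))  # -1.0 8 -0.632
```

With only five observations per group, as in this toy example, the normal-approximation p-value is not trustworthy; `scipy.stats.ttest_ind` computes the exact t-distribution p-value and supports both pooled and Welch variants through its `equal_var` parameter.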

To address the second research question, concerning the proportion of disengaged students in the treatment versus control groups, a chi-square test was performed to determine whether the percentage of disengaged students differed by group. For the purposes of this study, students were identified as disengaged if they completed less than 10% of the course and averaged a grade of 15% or less on assignments. The percentage of disengaged students differed by group, χ²(1, N = 3155) = -2.04, p = .04. Cramér’s V was then used to characterize the size of the difference. StrongMind found a 2.62% decrease in the proportion of disengaged students in the dashboard group, with a very small effect size (V = 0.03).
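The group comparison above is a 2×2 test of independence: two groups (dashboard, comparison) by two outcomes (disengaged, not disengaged). The sketch below computes the Pearson chi-square statistic, its p-value for one degree of freedom, and Cramér's V. It assumes no continuity correction, since the report does not say whether one was applied, and the counts in the example are hypothetical.

```python
import math
from typing import List, Tuple


def chi_square_2x2(table: List[List[int]]) -> Tuple[float, float, float]:
    """Pearson chi-square test of independence on a 2x2 table, with Cramér's V.

    Rows are groups (e.g. dashboard, comparison); columns are counts of
    disengaged and not-disengaged students. No Yates continuity correction.
    Returns (chi2, p, v).
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, chi2 is a squared standard normal, so this p is exact.
    p = math.erfc(math.sqrt(chi2 / 2))
    # Cramér's V = sqrt(chi2 / (n * (min(rows, cols) - 1))); the last factor is 1 for 2x2.
    v = math.sqrt(chi2 / n)
    return chi2, p, v


chi2, p, v = chi_square_2x2([[10, 90], [20, 80]])  # hypothetical counts
print(round(chi2, 3), round(v, 3))  # 3.922 0.14
```

Because a chi-square statistic is a sum of squared terms, it is never negative. `scipy.stats.chi2_contingency` offers the same test but applies Yates's correction to 2×2 tables by default, so its statistic will be somewhat smaller unless `correction=False` is passed.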

Discussion

This study addresses limitations of previous research by investigating the relationship between the use of a teacher-facing dashboard and student academic outcomes. Its findings help fill important gaps in the literature by providing evidence consistent with the assumption that this type of tool can be used to improve teaching efforts and, ultimately, student academic outcomes. This report summarizes findings showing that a dashboard, created specifically to present teachers with the student data needed to provide timely interventions and organize lesson plans, was associated with two positive outcomes for students.

Students in the treatment group outperformed their peers in the comparison group in both final grade and mean grade on the assignments they attempted. The treatment group also had a significantly smaller proportion of disengaged students. Although course completion rates did not differ significantly between the treatment and comparison groups, future studies could examine teacher tools and resources that improve course completion.

This work differs from previous research on teacher dashboards in two ways. First, it is one of the few investigations to integrate machine learning into a dashboard-focused application; prior work applying machine learning to educational data focused primarily on predicting student pass/fail, withdrawal, and retention (Vitiello et al., 2017). Second, the study focuses on the teacher dashboard’s impact on student academic performance. Most prior dashboard studies have examined teacher usage and implementation, and few have addressed the impact of teacher dashboards on student academic performance.

Limitations

This was a non-experimental study, and its findings are based on correlational patterns between variables. Although the analyses suggest that use of the teacher dashboard improved students’ final and attempted grades, correlation alone cannot establish causation. Furthermore, the study did not track how teachers interacted with the dashboard’s risk sort feature, or which interventions and course adjustments teachers made. These remain potential areas for future research that would shed light on these findings and further contribute to the literature.

References

Molenaar, I., & Knoop-van Campen, C. (2017). Teacher Dashboards in Practice: Usage and Impact. pp. 125–138. https://doi.org/10.1007/978-3-319-66610-5_10

Mottus, A., Kinshuk, Graf, S., & Chen, N.-S. (2015). Use of Dashboards and Visualization Techniques to Support Teacher Decision Making. https://doi.org/10.1007/978-3-662-44659-1_10

Rodríguez-Triana, M. J., Prieto, L. P., Vozniuk, A., Boroujeni, M. S., Schwendimann, B. A., Holzer, A., & Gillet, D. (2017). Monitoring, Awareness and Reflection in Blended Technology Enhanced Learning: A Systematic Review. International Journal of Technology Enhanced Learning, 9(2–3), 126–150.

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding Machine Learning: From Theory to Algorithms. New York, NY: Cambridge University Press.